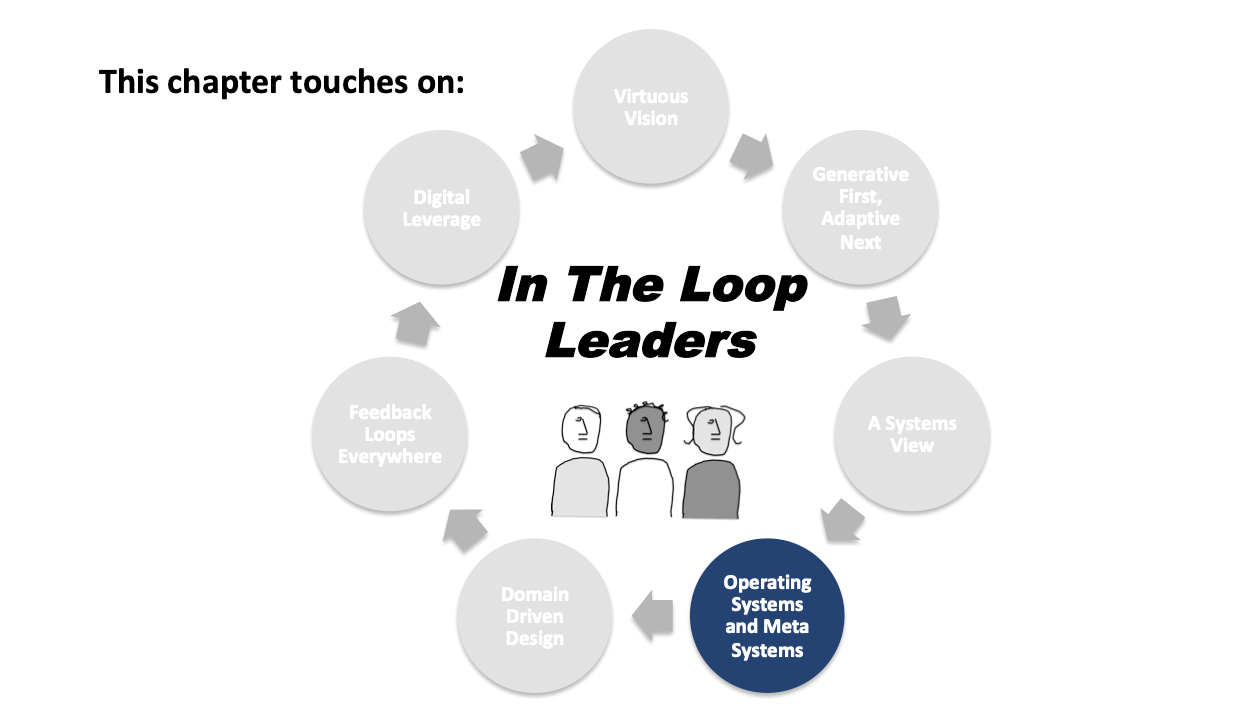

Book Summary notes on The Engineering System chapter

Meta systems exist for only one reason: to improve the performance of enterprise operating systems.

Engineering is a meta system. The engineering system ensures technology built by the enterprise is well built, maintained and operated. It advocates architectural standards used by development teams, promotes common frameworks and mechanisms, establishes standards for enterprise infrastructure, and guides technical domain teams in disciplined agile delivery methods. In essence, it exists to optimize the architecture, infrastructure, tools, methods and outcomes of technology development across the enterprise.

In the fit systems enterprise, the product management system and product discovery system are engines of technology development. But technology may also be developed by technical domain teams in less obvious places — such as within the accounting system, the revenue engine system or the human resource system. Sometimes technology is built to coordinate the flow of information across enterprise systems, following a distributed systems architecture.

Wherever home grown technology is built, the engineering system is at work. Why is this system so important?

Non-technical CEOs often express frustration with the time and resources it takes to develop new software and upgrade existing platforms. Facing pressure from customers and competitors, or the need to upgrade key operating systems, CEOs can mandate to their CTOs and VPs of Engineering and Product, “Just Get It Done Yesterday”. Such decrees frustrate technical teams. Architects and engineers know all too well the layers of considerations that must be addressed to build fit systems. Pressed to the wall and facing unrealistic deadlines, stressed engineering teams may cave — delivering technical systems that have been hacked together and loaded up with technical debt. Un-secure, monolithic, rigid and brittle, such systems fare poorly. Over time the technology portfolio of the enterprise, built to deliver leverage, becomes compromised — a millstone around the neck.

Alan Ngai, principal architect at Box, drew a map of the dysfunctions that can happen when corners are cut in software development. It is a negative reinforcing feedback loop:

Technical systems are judged by their quality attributes. Like all systems, technical systems are made up of stocks, flows and feedback loops. The transformations inside their flows may involve the processing of massive amounts of data, or the execution of complex workloads, or the calling of a web page. But whether the task is simple or complex, technical systems exist to serve people, solve problems and do assigned jobs well. Their value lies in the leverage they deliver humans. They must be available, run efficiently, be secure, recover fast, scale and be modifiable.

The best enterprises have figured out how to build great technical systems. These systems solve problems with speed and quality, yielding competitive advantage. They deliver customers transformative value. They uplift individual productivity, and turn enterprises into learning organizations. They make people better, smarter and faster. They can save lots of money and make lots of money.

But it doesn’t always go well. Many large enterprises are straining to keep up, disrupted by startups and more agile enterprise competitors who are beating them at the digital game. All too often, legacy technical systems are the critical constraint. Poorly developed or outdated technology can take a company’s operations to its knees, constrain growth, cause whole organizations to adopt monolithic command and control structures, and destroy individual productivity. It can make people worse, dumber and slower. It can waste lots of money and precipitate revenue decline. And the resulting technical debt can be a black vortex of hell that sucks joy out of engineers.

Just consider security. In 2017, a security breach at LinkedIn exposed 500M records. For Yahoo in that year 117M records were exposed, for VW it was 100M records, for Equifax 148M records, for T-Mobile 69M records and for Uber it was 57M records. These are highly successful companies with advanced technical systems — and yet they were breached. Needless to say, security has become a first-order concern for architects and engineers.

Security is just one of many concerns for technical teams. The cloud has ushered in virtualization and containerization, and the capacity to scale up and down compute resources based on need. These capabilities require new engineering competencies. With the emergence of IoT and the increase in transactions of all types, the volume, variety and velocity of data has exploded. Data has become more central to all applications. The rise in demand for AI, machine learning and deep learning can be seen in personalization and instant approval features, anomaly detection and robotic process automation. These emerging technical capabilities open the door to new value creation, but they also demand new knowledge and skills. Some companies have leveraged these new capabilities to great effect. Other companies are stuck with monolithic systems, and struggle to keep up.

You know fit technical systems when you see them. Applications and client-specific interfaces are user friendly. They are available the moment you need them. Requested data returns to you immediately and is accurate. Technical workflows work seamlessly, in concert with human workflows. Everything is secure. System modifications can be made quickly when needs evolve.

How does great technology happen? It’s all in the sausage making.

Seven technical advancements have increased the power of technology to transform the enterprise:

- From on premises or co-location computing to cloud computing

- From single machines to clusters of machines (nodes)

- From single core processors to multicore processors

- From expensive RAM to cheap RAM

- From expensive disks for storage to cheap disks for storage

- From slow networks to fast networks

- From individual applications to containerized applications

- From Build Your Own Infrastructure to Infrastructure as a Service

As a result of these breakthroughs, everything is more efficient. Many concurrent users can operate simultaneously in a system. The volume, variety and velocity of data that can be processed is vastly greater. Data can be made available to users instantaneously — with latency in milliseconds. Because infrastructure has become more plug-and-play, more time can be spent designing applications. Machine learning, deep learning and AI can be leveraged to deliver predictive, prescriptive and even cognitive capabilities.

But these capabilities are only of value if you know how to build them into your technical systems.

Domain Modeling and Architecture

Architecture is the starting point for great software. If you are a non-technical CEO, you need to understand what it takes to build great software. This requires that you take a shallow dive into the deep end of the pool — to get a little technical. Hang in there.

The improvement of a system starts with a current state analysis. In the problem domain, you assess its people, workflows, technology and money flows. You identify the bottlenecks, feedback lags and any archetypes (system traps) that may be limiting factors to system health or growth.

Once you have identified the problem, you determine how best to solve it. If you determine you need to build software, you prepare accordingly. You consider the range of technical capabilities available to you. And then you design the best possible solution.

The first step is to model the problem space. If this space exists in your product, the problem space is expressed by a customer-centric problem statement and a hypothesis. If this problem space exists inside an enterprise operating system, you start with the system map — with its stocks, flows and feedback loops. What exactly is the area of interest on the map? What dynamics are at play in terms of people, workflows, technology and money flows?

For instance, let’s take the revenue engine system. You might identify the product platform’s customer onboarding features as the problem domain of interest:

Architects will break the problem down into sub-domains and model the patterns of events that occur inside them. Here, best practice is to follow the principles of domain driven design — as described in Eric Evans’ book, Domain Driven Design: Tackling Complexity in the Heart of Software.¹ This approach to modeling problems and solutions is closely paired to the microservices architecture style.

Sub-domains in the problem space are translated into the solution space (the software architecture space) as bounded contexts. Bounded contexts are the boundaries of the domains within which transformations must occur. You can identify a bounded context by noticing where events happen. In the solution space, the sub-domains and their corresponding bounded contexts must be defined at the application layer, database layer and infrastructure layer based on a holistic understanding of the business and the capabilities of the cloud. This is where the solution design is developed.

In the modeling of the problem and solution, naming conventions are important. Software development can seem to non-technical executives like a “black box”. By creating a model that uses straightforward, domain-specific language, non-technical stakeholders and technical workers can gain shared understanding of the system and the changes that must be made. In the terminology of domain driven design, this common language is called a “ubiquitous language”. It helps all stakeholders understand the meaning of the words used in the model. This helps them evaluate system design and verify that its intended outputs, as designed, do indeed solve the intended problem. As the model is expressed in code, domain-relevant naming conventions also make it easier to organize things and find them.

Andreas Diepenbrock, Florian Rademacher and Sabine Sachweh, in An Ontology-based Approach for Domain-driven Design of Microservice Architectures,² present the following image of a microservice.

The microservice is expressed by a bounded context, which is connected via entities, services and value objects to a corresponding domain model (relevant just to the domain) and to a shared model (able to be shared with other domains; used to communicate between domain models). This modeling methodology can be used to identify important interconnections.

As the model grows, a context map can be developed to show how bounded contexts interact with each other. As teams iterate on the model, their understanding of the business logic inside the domain rises and the model improves. This evolving model informs the architectural design.

In domain driven design, the idea is to model only those things that are directly relevant to the software you need to develop. Everyone on the team understands that the model is dynamic; it will mature over time. Best practice is to model quickly and then start coding. Coding improves the model. Best practice development teams keep the model and the code in close alignment as both evolve.

Detailed Architecture and Design

So how do you gain the benefit of today’s cloud capabilities? For most technical solutions in the enterprise it requires that you adopt the reactive microservices architecture style. Now to be clear, the microservices style doesn’t make sense in an early stage startup. It requires too much overhead. In the early going, you will build the simplest possible stack to deliver the required functionality. By definition, it will be a monolith. But once you prove product / market fit and begin to scale, you’ll approach the point where it’s time to refactor your technology into microservices.

Domain driven design goes hand and glove with reactive microservices architecture. If your existing technology is not built based on microservices architecture and you are at enterprise scale, your technology is a monolith. Monoliths are usually bad, because everything is connected together — a change to one component requires the entire system to be updated. This makes system failures more difficult to fix, and changes more difficult to implement. Monoliths slow the entire enterprise down.

Microservices architecture is built on the principle of isolation. Services are created to do one thing, and one thing well. This simplifies operation, reduces the impact of service failure and makes it easier to add, change or delete a service in the application over time. To address modern data needs, microservices need to be reactive.

We will address the subject of reactive microservices architecture more completely in Chapter 24.

Mechanisms

The engineering system is supported by mechanisms. Mechanisms simplify development and delivery processes. They include (with contributions from Wikipedia):

- Plan: architecture styles, reference architectures, architecture tools, frameworks, libraries, directories, toolkits, SDKs, languages, APIs

- Create: code development and review tools, source code management tools, continuous integration tools, build status tools, security tools

- Verify: Testing and QA tools, security tools

- Package: artifact repository, application pre-deployment staging tools

- Release: change management tools, release approval and automation tools

- Configure: configuration and management tools, infrastructure as code tools

- Monitor: application performance monitoring tools, threat detection and authentication tools

In architecture design, common patterns are addressed through reference architectures. Reference architectures are like the “build your own house” architecture plan books you can buy. They guide architects and engineers to well thought through designs that solve common problems.

Here is an example of a reference architecture from AWS, addressing fault tolerance:

Reference architectures save time because they provide a starting point for your design. A healthy engineering system will leverage reference architectures to avoid reinventing the wheel.

A framework provides a package of software that can be selectively changed with user-written code. The result is software that is application-specific. Frameworks differ from libraries in that they invert control. With a script in a library, the developer is in control. With a framework, the framework dictates the flow of control. A framework may encompass toolkits, code libraries, compilers and APIs necessary to enable the development of a system. Play (often paired with the toolkit Akka) is a framework.

Libraries consist of pre-written code, formulas, procedures, scripts, classes and configuration data. A developer can add a library to a program and point to the required functionality in the library, without having to write code for it.

A directory is a file system that stores hierarchical information about objects on a network. For instance, Microsoft’s Active Directory stores objects such as servers, printers and network user and computer accounts (logins, passwords, etc.). Directories are used for authentication and routing.

A toolkit is a single utility program. It includes software routines used to develop and maintain applications. Akka is a toolkit.

APIs are the interfaces that connect application programs together. REST is an API. There are also JSON-based APIs. APIs play a central role in microservices architecture.

Programming languages are formal languages with syntax and semantics. They are used to write programs that execute instructions that produce outputs via algorithms. C++ and Scala are languages.

Tools exist to architect, test, QA, stage, change, release, automate, configure, manage and monitor systems. Tools support every step of the software development and maintenance journey.

Reference architectures, frameworks, toolkits, APIs, directories, languages and tools are the mechanisms used by architects and engineers to design, develop and maintain technical systems. The engineering system promotes and propagates mechanisms to development teams throughout the enterprise. These mechanisms are effective when employed in a well thought through architecture, executed using proper practices. Proof of effectiveness is software, built efficiently, that does the job.

Technical Domain Teams

In the fit systems enterprise small, cross-functional, autonomous development teams are responsible for developing technical systems inside domains (for more on this, reread Chapter 7). These teams are organized around business domains, not technical functions. In a legacy technical organization design, one might find a UI team, a server team and a database team. Not so in the fit systems enterprise.

Here, the domain reflects a business domain. Within a B2B SaaS product, the domain to which a technical team is assigned might be a feature — such as onboarding, or the catalog, or search, or pricing, or shipping. Or it could be a client — such as desktop, Android or iOS. Inside each technical domain, the user experience, the compute infrastructure and database management are all under the responsibility of the team. There may be infrastructure teams, but they exist to support and make more efficient the feature and client teams.

Feature teams might include a product manager, architect, agile coach or delivery manager, data analyst and multiple developers. The product manager’s job is to translate the business outcome objective of the domain into feature-specific jobs that must be done. The product manager is usually the working leader of a technical team. Teams responsible for user features need designers. The design function is critical on technical development teams. Some teams may need expert algorithmists who can solve complex math problems. Others may need system architects who can string together distributed systems. The specific makeup of a technical domain team will depend on the technology the team needs to support.

In the beginning of a tech company, there is just one domain — occupied by perhaps one engineer and a product manager / CEO. But as the company scales, domains and domain teams proliferate like fractal geometry. At scale, scores of domains may be formally defined, each assigned to an application team, client interface team or infrastructure team — as was done at Spotify. Teams are broken into feature squads (applications), container squads (client interfaces) and infrastructure squads (database and back end systems), as Spotify did:

At the infrastructure level, engineering system teams build and maintain the tools and technology to support applications and client interfaces. Networks are secure and efficient. Databases are maintained and accessed at low cost and high availability. The cloud is efficiently leveraged. Maintenance is straightforward and efficient. It’s easy to detect failure, to heal and recover, and to manage computing resources. New deployments are easy and safe — no risk of major system failure. Introducing changes into existing infrastructure doesn’t require elite technical skill or extensive tribal knowledge.

Henrik Kniberg and Anders Ivarsson, in their 2012 video “Scaling Agile @ Spotify”, describe the interaction between development squads and infrastructure operations teams as follows:

“A common source of dependency issues at many companies is development vs operations. Most companies we’ve worked with have some kind of a handoff from dev to ops, with associated friction and delays. At Spotify there is a separate operations team, but their job is not to make releases for the squads — their job is to give the squads the support they need to release code themselves; support in the form of infrastructure, scripts, and routines. They are, in a sense, ‘building the road to production’. It’s an informal but effective collaboration, based on face-to-face communication rather than detailed process documentation.”³

In general, non-specialization is better than specialization. A developer competent at all the technologies noted below is way more valuable than a developer only competent in one:

- Ruby

- Java

- iOS

- Android

- SQL and non-SQL databases

- Testing

- Object Oriented design

- Node.js

- HTML / CSS / JavaScript

When you have too many specialists, utilization goes down due to the variation in needs over time. And there is a big communications overhang required to maintain alignment. A team that includes master developers who are expert at 3–4 technologies and competent in 7–9 can be much more efficient and effective than a team with individual experts for each technology.

As a rule, it is best for the membership of teams to remain fairly consistent. It also makes sense whenever possible for teams to work physically in the same space. It takes time for teams to bond; continuity and proximity help.

Methods

Methods are also key in the engineering system. Methods are improved when teams are disciplined and seek continuous learning. One of the most important jobs of VPs of Engineering and Product is to ensure the engineering system builds best practice methods within all domain teams.

In the fit systems enterprise, teams build and maintain software following the principles of Disciplined Agile Delivery (DAD)⁴. DAD was developed by Scott Ambler as a more disciplined evolution of the agile model. It draws from lean /agile / scrum / kanban philosophies and the principles of the Rational Unified Process to present a tightly managed development process.

In DAD, work is broken down into a light inception process at the beginning, a light transition process at the end and a more intensive construction process in the middle.

The goal in the inception phase is to understand the business problem to be solved and provide the minimum architecture necessary to guide initial construction. On the one hand, it avoids the “heavy upfront” approach of the waterfall method. But on the other hand, it allows for enough up-front design to model the problem well, and to draw a basic system architecture.

In the construction phase, the goal is to produce a potentially consumable solution in every iteration — one that addresses stakeholder needs. The team builds component by component, feature by feature, in sprints — referred to as iterations in DAD. Coding informs the evolving model. The construction phase is the longest phase of a project.

Finally, in the transition phase, solutions are confirmed to be consumable and are prepared for release. Throughout the project, the goal is to fulfill the mission, grow team members, improve the process, address risk, coordinate activities and leverage and enhance existing infrastructure.

Here is Ambler’s summary of the stages in DAD, and the goals for each:

DAD brings greater rigor to the inception phase of visioning, modeling and architecting, while keeping it lightweight. This is an important expansion of traditional agile / scrum philosophy. Agile developed as a reaction to waterfall, with its heavy front-end-loaded planning and contracting. DAD rebalances agile, acknowledging that work is still required at the front end of the project to get basic system architecture right. The resulting systems development life cycle looks like this:

Notice that this is a system map. It represents the engineering workflow. It includes stocks (work items, iteration backlog, tasks), flows (envision the future, iterations, daily work), and feedback loops (funding & feedback, enhancement requests and defect reports). We could easily add a layer for “workers” above this, defining specific roles; and a layer below it, defining the “technology” (as shown in the mechanisms listed in the previous section under “Plan, Create, Verify, Package, Release and Monitor”). And the “money flows” could be built out more explicitly. This Disciplined Agile Delivery lifecycle system map is powerful because it adds discipline to the traditional agile workflow.

The system as shown is designed for efficiency and quality. A list of initial requirements and a release plan is developed during the inception stage, along with the initial modeling and high-level architecture. Work proceeds based on iterations (sprints), with daily coordination meetings (scrums). Regular retrospectives and demos to stakeholders keep the project aligned with stakeholder needs. Enhancement requests and defect reports feed the backlog with new work items.

In “Going Beyond Scrum”, Ambler predicts that over time inside a domain team the inception and transition phases will shrink as the model and architecture matures, and the team will focus more and more on the construction phase — the world of continuous delivery. Like this:

With continuous delivery, a single developer might release new code into production as often as twice a day.

When the product road map is changed, and during periods of remodeling and architectural renewal, the length of the inception and transition phases will expand again. As teams return to periods of architectural stability, these two phases will shrink again. For most teams it is likely that the contraction-expansion cycle will episodically recur over time.

More about the Cloud

Proof of engineering system health is seen in technical systems that are:

- Responsive (available to users when needed)

- Operationally excellent (efficiently deliver intended business value and can be efficiently and continuously improved)

- Secure (executes risk assessments and mitigations to ensure information, assets and business value are protected)

- Resilient (can self-heal, quickly recovering from disruptions resulting from component failures, misconfigurations or issues with the network)

- Elastic (can access computing resources in the cloud as required to meet demand, whether low, medium or high)

- Modifiable (as architectures and technologies evolve, can be continuously refactored and upgraded)

- Efficient (are designed for efficiency; and are efficiently implemented, maintained and modified)

- Cost optimized (are designed to use the minimum necessary cost-driving resources at the lowest possible price point)

For most technical systems, the architect must balance a set of tradeoffs between these quality attributes. Which matters most — minimizing system latency (eventual data consistency) or maximizing data accuracy (strong data consistency)? What dependencies should be incorporated inside a service, versus remaining outside of it? How secure must the system be? How does the cost of cloud compute utilization compare to the value generated from the computing? The questions to be answered are many.

The cloud has emerged to reduce (but not eliminate) the cost of these tradeoffs. AWS⁵, identified six principles to follow to better leverage the cloud in your system architecture:

Stop guessing your capacity needs

Eliminate guessing about your infrastructure capacity needs. When you make a capacity decision before you deploy a system, you might end up sitting on expensive idle resources or dealing with the performance implications of limited capacity. With cloud computing, these problems can go away. You can use as much or as little capacity as you need, and scale up and down automatically.

Test systems at production scale

In the cloud, you can create a production-scale test environment on demand, complete your testing, and then decommission the resources. Because you only pay for the test environment when it’s running, you can simulate your live environment for a fraction of the cost of testing on premises.

Automate to make architectural experimentation easier

Automation allows you to create and replicate your systems at low cost and avoid the expense of manual effort. You can track changes to your automation, audit the impact, and revert to previous parameters when necessary.

Allow for evolutionary architectures

Allow for evolutionary architectures. In a traditional environment, architectural decisions are often implemented as static, one-time events, with a few major versions of a system during its lifetime. As a business and its context continue to change, these initial decisions might hinder the system’s ability to deliver changing business requirements. In the cloud, the capability to automate and test on demand lowers the risk of impact from design changes. This allows systems to evolve over time so that businesses can take advantage of innovations as a standard practice.

Evolve architectures using data

In the cloud you can collect data on how your architectural choices affect the behavior of your workload. This lets you make fact-based decisions on how to improve your workload. Your cloud infrastructure is code, so you can use that data to inform your architecture choices and improvements over time.

Improve through game days

Test how your architecture and processes perform by regularly scheduling game days to simulate events in production. This will help you understand where improvements can be made and can help develop organizational experience in dealing with events.

Summary

The engineering system is a socio-technical system that is fit and healthy when development teams are developing and releasing well architected software efficiently and well. The healthy engineering system continuously uplifts the teams who execute software development: their culture, capabilities, human competency, mechanisms and practices. It maintains enterprise architecture and infrastructure for distributed systems. And it provides a communication bridge between non-technical and technical leaders. As a result, leaders are aligned on development priorities. In development, time to production is fast, the rate of release failure is low, bug fixing is easy, failure is contained, recovery is immediate, the power of the cloud is leveraged and technical systems can be easily modified and updated.

As tech startups scale past the Minimum Viable Scaling stage and head towards Minimum Viable Expansion, and as the number of product managers, engineers and development teams grows, the engineering system becomes a gating factor in success. For the large legacy enterprise that lacks roots in technology but seeks to digitize its product lines or undergo digital transformation, a healthy engineering system is mission critical.

Refernce:

https://medium.com/ceoquest/in-the-loop-chapter-15-the-engineering-system-df16848a2c77